Artificial Intelligence: The next technological revolution

Dear investors,

The invention of steam engines in the late 18th century ushered in a period of great advances in economic productivity that became known as the first industrial revolution. This rapid progress was interpreted simultaneously in two completely opposite ways: on the one hand, a utopian hope that productivity increases would be so great that people would work less and have more free time to enjoy their lives; on the other, the fear that machines would replace workers, throwing them into unemployment and inevitable poverty. In fact, this second interpretation gave rise to Luddism, a movement of workers who invaded factories and destroyed machines in an attempt to prevent technological advances that, supposedly, would lead to their ruin.

Jumping forward two centuries, there's a somewhat similar discussion today about the impact artificial intelligence (AI) will have on the world. Enthusiasts see the potential for a new era of accelerated productivity growth and the consequent production of material wealth for humanity to enjoy, while others see a major threat, going so far as to predict an apocalyptic future where machines controlled by superintelligent AIs conclude that the world would be better off without the human race and decide to eliminate it.

We are not experts on the subject, but it is obvious that the impact of this technology will be substantial, so we believe it is pertinent to monitor the matter closely, both to seek to identify investments that could benefit from the advancement of AI and to remain alert to the potential risk that the transformation ahead may pose to certain business segments.

We will share our current vision, certainly incomplete and imperfect, on the potential impacts of AI, striving to be as pragmatic and realistic as we currently can. Any criticism, disagreements, or additions are welcome.

What is Artificial Intelligence today?

Current AI tools are based on machine learning, a branch of computational statistics focused on developing algorithms capable of self-adjusting their parameters through a large number of iterations, creating statistical models capable of making predictions without using a pre-programmed formula. In practice, these algorithms analyze millions of data pairs (e.g., a text describing an image and the image being described) and adjust their parameters to best fit this enormous data set. After this calibration, the algorithm is able to analyze just one element of a similar data pair and, from this, predict the other element (e.g., from a text describing an image, create an image that fits the description).
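To make the idea of "self-adjusting parameters" more concrete, here is a minimal sketch in Python. It is a toy illustration and not how any real AI product is built: the data pairs, the hidden rule (y = 2x + 1), the learning rate, and the number of iterations are all assumptions chosen for the example. The algorithm is never given the formula. It only sees pairs of numbers and repeatedly nudges two parameters until its predictions fit the data.

```python
# Toy illustration: learn y = w*x + b from example pairs by repeated adjustment.
# The data, learning rate, and iteration count are illustrative assumptions.

def train(pairs, learning_rate=0.01, iterations=5000):
    w, b = 0.0, 0.0  # parameters start with no knowledge of the data
    n = len(pairs)
    for _ in range(iterations):
        # Average gradient of the squared prediction error over all pairs
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        # Nudge each parameter in the direction that reduces the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    # Given one element of a pair (x), predict the other (y)
    return w * x + b


# Example pairs generated by a rule the algorithm is never shown
pairs = [(x, 2 * x + 1) for x in range(10)]
w, b = train(pairs)
print(f"learned w={w:.3f}, b={b:.3f}, prediction for x=20: {predict(w, b, 20):.2f}")
```

Real systems follow the same basic loop, but with billions of parameters and far richer data than pairs of numbers.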

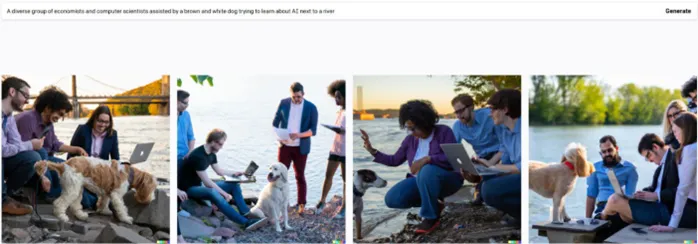

Without delving into the philosophical debate about whether this prediction mechanism based on statistical models is equivalent to human intelligence, the fact is that these models perform tasks previously impossible for software quickly, cheaply, and better than most humans could. For example, an AI called DALL-E generated the following images from the description: "a diverse group of economists and computer scientists, accompanied by a white and brown dog, trying to learn about AI near a river."

Note that the images are not photographs. They are entirely created by AI and have certain imperfections that a skilled human painter wouldn't make (see people's hands), but they are produced very quickly, at a low cost, and using a newly released technology that certainly still has a lot of room for improvement.

Another notable example is ChatGPT, a chatbot launched in November 2022 that boasts a "near-human" ability to converse (in multiple languages) and a certainly superhuman ability to answer questions on a wide range of topics, drawing its "knowledge" from the vast amount of written content available on the internet. The tool still has some limitations. For example, it sometimes produces false information, and the quality of its writing, while quite reasonable, doesn't seem to surpass that of erudite people. In any case, it's an undeniably impressive technology, especially considering that it has been in widespread use for only a few months.

Interestingly, the ideas behind these algorithms aren't new. They emerged around the 1960s and went through several cycles of enthusiasm and disillusionment in the scientific community. What made these new solutions gain popularity and notoriety was the advancement of computing power, which allowed AI algorithms to use sufficiently large data sets to achieve accuracy rates good enough to become truly useful.

Potential Uses of Artificial Intelligence

Some software is designed to fully automate tasks that humans could perform, while other software is designed to enhance human productivity in certain tasks (e.g., the Office suite). Artificial Intelligence is a new class of software that serves both purposes: task automation and the enhancement of human capabilities. There is, however, an important difference compared to traditional software.

Non-AI software can only perform activities whose step-by-step process can be formally described and programmed in logical expressions. Identifying whether there's a dog in a photo, for example, is a practically impossible task for traditional software, due to the difficulty of describing what a dog is in logical and mathematical terms. In contrast, an AI tool can be trained to recognize dogs by being shown millions of examples of dog photos. After this calibration, it will be able to recognize dogs in new photos as accurately as a person can, and much faster.

This ability to recognize patterns in images alone is already extremely valuable. For example, it's very likely that doctors who currently perform diagnostic imaging exams (X-rays, ultrasounds, MRIs, etc.) will be replaced by AI software that will issue reports faster, with a higher accuracy rate, and at a fraction of the current cost. On the one hand, this may be seen as a threat to the role of radiologists, but it will also enhance the work of other physicians, who will receive almost instantaneously the reports they need to determine how to treat their patients.

Another very likely use is for AIs to replace human-based customer service. Chatbots are already widely used in customer service today (you've certainly interacted with one), but most of them are so limited that, in most cases, the customer is still transferred to a human. Soon, AI tools will reach a level sufficient to offer better service than a human, with complete knowledge of all information related to the products and services offered by a given company and infinite patience to serve any customer cordially.

The threat of mass unemployment

Current AI technology enables the creation of tools that serve a specific purpose: they can become extremely efficient at performing a given task, but they are incapable of learning tasks of a different nature. The great fear of those who advocate against AI is that this technology will evolve into an Artificial General Intelligence (AGI), software capable of learning anything and that, once created, could evolve beyond human capabilities and replace humans in any cognitive function. However, nothing close to AGI exists yet, and there is considerable debate as to whether humanity will ever be capable of developing something like it.

In the absence of a super-powerful AI, it seems more likely that AI's impact will be similar to that of other disruptive technologies that have emerged throughout history. For example, before computers existed, all forms of mathematical calculation were performed manually by people. Engineering and scientific research organizations employed large numbers of people to perform and review calculations, in positions seen as low-value-added. The emergence of computers completely eliminated this professional category, but it greatly increased the productivity of people who relied on calculations in their professions and in no way diminished the importance of mathematical knowledge.

This same dynamic can be applied to countless instances of technological advancement, even in completely different fields. Today, there are agricultural machines that make a single farmer as productive as hundreds of people working on a non-mechanized farm. In all cases, increasing productivity by reducing the human effort required for the task is obviously a good thing.

Another obvious fact is that technology, which since the 18th century has provoked fears among some that it might make humans obsolete, has yet to contribute much to reducing the workload required of humanity. People still spend most of their days working to support themselves, because the average standard of living has risen significantly over time, and maintaining this higher standard requires more human effort.

For example, no one in the last century imagined that internet access would be considered a "basic necessity," but ensuring it today requires massive fiber optic networks, a host of 24/7 data storage and transmission equipment, and billions of electronic devices for personal use. All the tasks involved in widespread internet access were completely unimaginable a century ago. As more and more products and services become part of our daily lives, new jobs emerge, and the demand for human labor continues to exist.

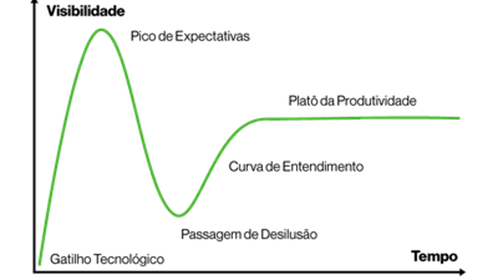

The Hype Cycle

Gartner, a major technology consulting firm, created a schematic representation of how expectations related to a new technology evolve over time. In the first phase, the technology gains attention through speculation about its potential, but there is little clarity regarding its potential use or commercial viability. In a second stage, experiments begin to be successful, and enormous expectations are created around the transformative potential that the new technology will bring to the world. Later, expectations prove unrealistic, or more difficult to achieve than initially imagined, and widespread excitement gives way to collective frustration at the unfulfilled promises. However, problems are gradually overcome, and better ways to use the new tools are discovered until the technology matures and enters a phase of productivity and realistic expectations. The graph below represents this cycle.

Today, it seems that AI is at its peak of expectations (hype). Everyone sees enormous potential in the abstract, but we still don't know to what extent this technology will evolve, how fast it will evolve, or which applications will be economically efficient enough to be widely used. Therefore, much of what is currently being said about AI is likely to contain some hint of exaggeration and speculation. New technologies typically take several years to mature and reach plateau levels of productivity, so the future of AI is still difficult to predict in concrete, detailed terms.

Impact of AI on investments

Finally, what always interests us: how can this topic influence investment decisions?

Investing in AI companies is probably a bad idea right now, because during the hype, valuations of companies at the forefront of the sector tend to be hyper-inflated, and it's very difficult to predict which company will become the ultimate leader in its segment. A great example of this is the story of Yahoo and Google. Yahoo was founded in 1994 and was the dominant search engine until the early 2000s, reaching a peak value of over $125 billion, more valuable than Ford, Chrysler, and GM combined at the time. Even so, Google, founded in 1998, beat Yahoo to become the undisputed leader among search engines. Today, OpenAI is one of the most prominent companies in the sector, thanks to the success of ChatGPT, but it's very difficult to predict whether its future will be similar to that of Yahoo or Google.

Possibly more interesting investment theses could be found by seeking opportunities among companies that supply the AI sector, following the maxim that, "in a gold rush, those who sell shovels and pickaxes make the most money." This is also not an easy task because, although it doesn't take much effort to conclude that AI depends on robust data centers to store and process massive volumes of data, it is necessary to analyze each supplier sector to determine whether they face strong competition and risks of technological disruption.

Another possibility is to look at the sectors that should benefit from the use of AI. For example, the music industry has benefited greatly from technologies that have made it possible to record audio and distribute music albums on records, tapes, CDs, and now through streaming. Some record labels have become multi-billion-dollar companies due to the scalability that technology has brought to their businesses. Likewise, AI will certainly enable some businesses to become more efficient, scalable, and profitable for their shareholders.

Just as important as identifying the companies that will benefit is understanding which businesses AI poses a risk to. New technologies can destroy companies, a fact well illustrated by the history of Kodak, founded in 1888 and for over a century a leading name in the photographic film and camera market. When digital cameras became popular in the 2000s, Kodak was slower than its competitors in developing new products and ended up falling behind. In 2012, the company filed for bankruptcy.

Today, we're still more focused on detecting AI-related risks, especially for the companies in our portfolio, than on developing investment theses based on the progress of this technology, due to the still-significant uncertainty surrounding the topic. However, we believe that AI has the potential to become as revolutionary as the emergence of computers, the internet, and smartphones, and therefore, it's a topic we intend to continue monitoring closely.