Dear investors,

In April 1965, an article entitled "Cramming More Components Onto Electronic Circuits" appeared in Electronics magazine. Although its title may only excite engineering enthusiasts, the article went on to become one of the most influential contributions in the history of technology.

Its author was Gordon Moore, then director of R&D at Fairchild Semiconductor, who would later co-found Intel. In the article, Moore predicted that the number of components on an integrated circuit would double every year, a pace he revised in 1975 to roughly every two years. This prediction became known as Moore's Law.

The prediction was originally made for the following 10 years, but it has broadly held for nearly 60 years. Following this trajectory, the number of transistors that fit on a chip has grown from 4 to more than 16 billion today¹, allowing the technology to become ever more prevalent in our lives. At the center of the digital universe, chips such as microprocessors are the brains behind the computers, smartphones, tablets and myriad electronic devices that underpin the digital economy. Without them, many of the advances we see in fields such as artificial intelligence, Big Data, the Internet of Things (IoT) and cloud computing would not be possible.
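To put these figures in perspective, here is a small back-of-the-envelope sketch. It assumes the starting point of 4 transistors in 1965 and about 16 billion "today", taking "today" as 2023 purely for illustration. The sketch computes how many doublings that growth represents, the average doubling time it implies, and what a strict two-year doubling would have produced. The function names are our own and purely illustrative.

```python
import math


def doublings_between(start_count: float, end_count: float) -> float:
    """Number of doublings needed to grow from start_count to end_count."""
    return math.log2(end_count / start_count)


def implied_doubling_time(start_count: float, end_count: float, years: float) -> float:
    """Average number of years per doubling implied by the growth over the period."""
    return years / doublings_between(start_count, end_count)


def projected_count(start_count: float, years: float, doubling_period: float) -> float:
    """Count reached if growth doubled exactly every doubling_period years."""
    return start_count * 2 ** (years / doubling_period)


# Assumptions: 4 transistors in 1965 and about 16 billion "today",
# with "today" taken as 2023 purely for illustration.
START, END = 4, 16e9
YEARS = 2023 - 1965  # 58 years

print(f"Doublings: {doublings_between(START, END):.1f}")
print(f"Implied years per doubling: {implied_doubling_time(START, END, YEARS):.2f}")
print(f"Strict 2-year doubling would give: {projected_count(START, YEARS, 2):,.0f}")
```

Under these assumptions, the growth amounts to about 31.9 doublings, or one roughly every 1.82 years. A strict two-year doubling starting from 4 transistors would have reached only about 2.1 billion. This is consistent with the faster, annual pace of the early years.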

Demand for chips is growing across a variety of industries, from automotive to healthcare, agriculture and even space exploration. Shortages can have dramatic effects on manufacturing and the wider economy. The pandemic made this clear when a lack of chips hit the auto industry hard. A modern vehicle contains, on average, 1,500 chips. Without them, many vehicles could not be produced, and the industry is estimated to have lost up to USD 200 billion in sales in recent years. The impact is equally visible in the sharp rise in car prices over the same period.

Because the technology in this industry is owned by very few companies, each specialized in one stage of the production chain, new bottlenecks are not unthinkable. They could also be far more severe than those seen during the pandemic, especially since the company that produces almost 90% of the world's most advanced chips² (TSMC) is located in Taiwan.

In the next few paragraphs, we briefly explain the history of the industry, how it is structured today, its role in the global geopolitical landscape and the implications for our investments. For those interested in going deeper into the subject, we recommend the book Chip War, by Chris Miller.

What exactly is a chip?

To understand how a chip works, it's helpful to start with the concept of a semiconductor.

Semiconductors are materials whose electrical properties fall somewhere between those of conductors (such as copper, which conducts electricity very well) and insulators (such as rubber, which does not conduct electricity). Silicon is the semiconductor most commonly used in chip manufacturing, and it gives its name to Silicon Valley, the technology hub in California.

Chips are made up of tiny components called transistors, which are built from this semiconductor material. Think of a transistor as a small switch that can turn the flow of electricity on and off. This ability to control electricity is crucial to how a chip works, because it is how binary language (a code of 1s and 0s) is represented. When a transistor is “on”, it registers a “1”, and when it is “off”, it registers a “0”. These sequences of 1s and 0s are the fundamental language that computers use to process information.
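As a toy illustration, the sketch treats each transistor as an on/off switch and reads a row of eight switches as a binary number. This is our own simplification and not how real circuits store data: in actual memory cells, a single bit often takes several transistors or additional components. The function names are hypothetical.

```python
def switches_to_bits(switches: list[bool]) -> str:
    """Map each switch state to a bit: on -> '1', off -> '0'."""
    return "".join("1" if on else "0" for on in switches)


def bits_to_int(bits: str) -> int:
    """Interpret a string of 1s and 0s as an unsigned binary number."""
    return int(bits, 2)


# Hypothetical example: eight switches (one byte), listed from the
# most significant bit to the least significant bit.
switches = [False, True, False, False, False, False, False, True]
bits = switches_to_bits(switches)
value = bits_to_int(bits)

print(bits)        # 01000001
print(value)       # 65
print(chr(value))  # A (65 is the ASCII/Unicode code for "A")
```

The same eight on/off states can represent a number (65) or, by convention, a letter ("A"). Everything a computer processes ultimately reduces to patterns like this one.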

Brief history of the chip industry

The semiconductor industry has its roots in the mid-20th century, when the invention of the transistor at Bell Labs in 1947 changed the course of technology. This small device, which could amplify and switch electrical signals, eventually replaced the vacuum tube, which was larger, consumed more energy and was less reliable. In 1958 and 1959, respectively, Jack Kilby of Texas Instruments and Robert Noyce of Fairchild Semiconductor independently created the first integrated circuits (or chips), which combined multiple electronic components into a single device. This invention marked the beginning of the silicon era and the birth of the chip industry.

In the early years, there was little commercial demand for these products, which found their first uses in military applications. The fact that the US was lagging behind in the space race provided the necessary impetus for computing demand. Fairchild Semiconductor received a large order from NASA for the Apollo program (which landed a man on the moon in 1969), while Texas Instruments received a large order from the US Air Force for a missile guidance system.

In the 1970s, companies such as Intel and AMD emerged as major players in the industry, with Intel introducing the first commercially available microprocessor, the Intel 4004, in 1971. The microprocessor led to significant advances in computing and other technologies, fueling the rise of the personal computer in the 1980s.

From the 1980s into the 21st century, the semiconductor industry expanded beyond computers to an ever-growing range of applications, including mobile phones, Internet of Things (IoT) devices and artificial intelligence systems. A global division of labor has also emerged: some companies, such as Intel, design and manufacture their own chips, while others, such as Apple and Qualcomm, design chips but outsource manufacturing to companies such as TSMC.

Current industry overview

Considering the industry's importance, it's surprising how concentrated it is.

Companies like Apple, Nvidia and AMD play an important role, but they only design the chips. To do so, they depend on chip design software, a market dominated by just four companies (three American and one German) that together hold 90% of the global market: Synopsys, Cadence, Ansys and Siemens.

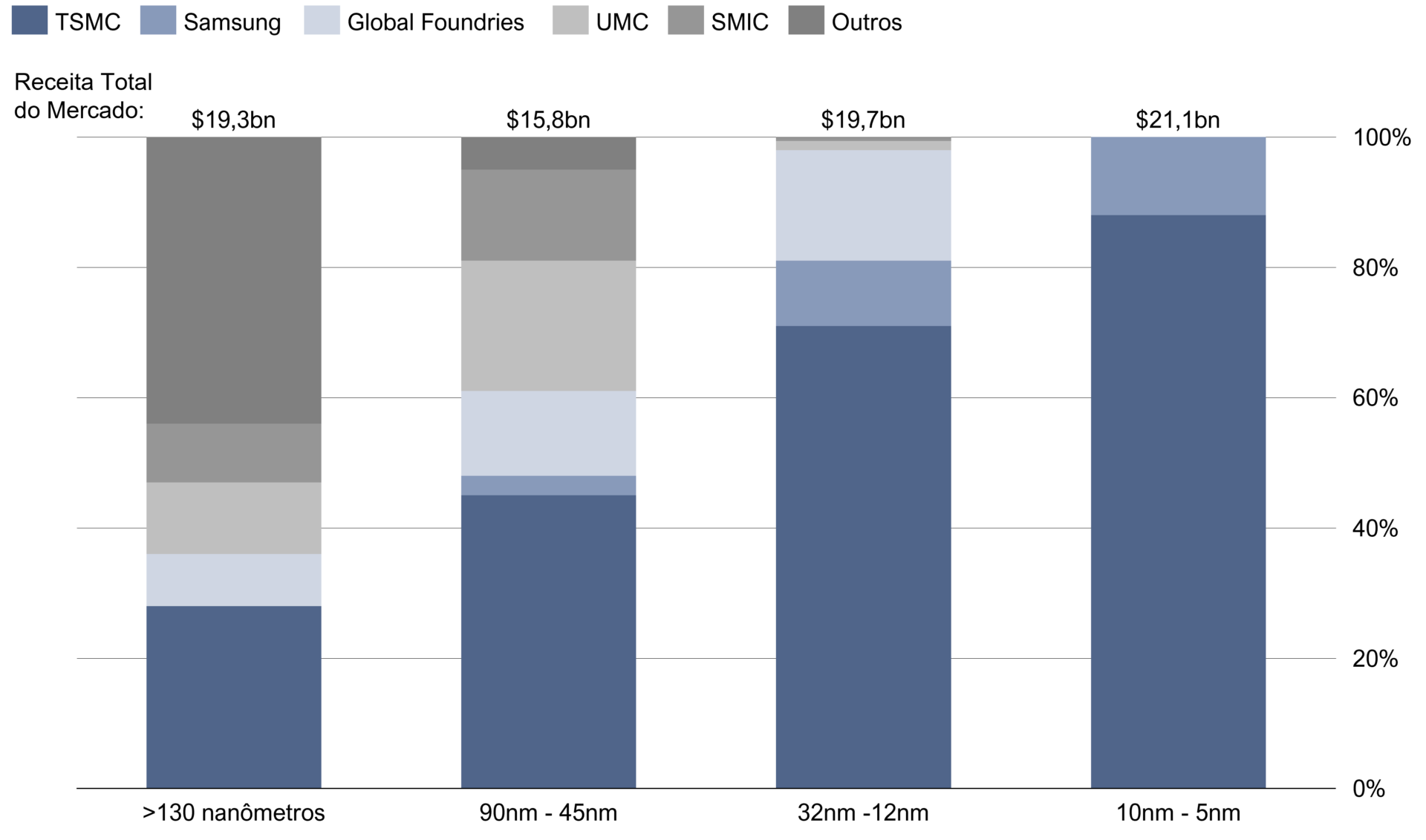

Once the design is complete, it is sent to a chipmaker. Depending on the complexity of the product, only 2 or 3 companies in the world are capable of producing it: TSMC (Taiwan), Samsung (South Korea) and Intel (USA)³. For less advanced chips, other companies also compete, such as the American GlobalFoundries (a former AMD spin-off), Taiwan's UMC and China's SMIC. TSMC's dominance of this market is noteworthy: for the most advanced chips, with nodes smaller than 10 nm, it holds a 90% market share. Even for less advanced (larger) nodes, the company holds a dominant position, with a market share above 50%. Details in the chart below.

Graph 1 – Market share of non-integrated chipmakers by node, 2020 data